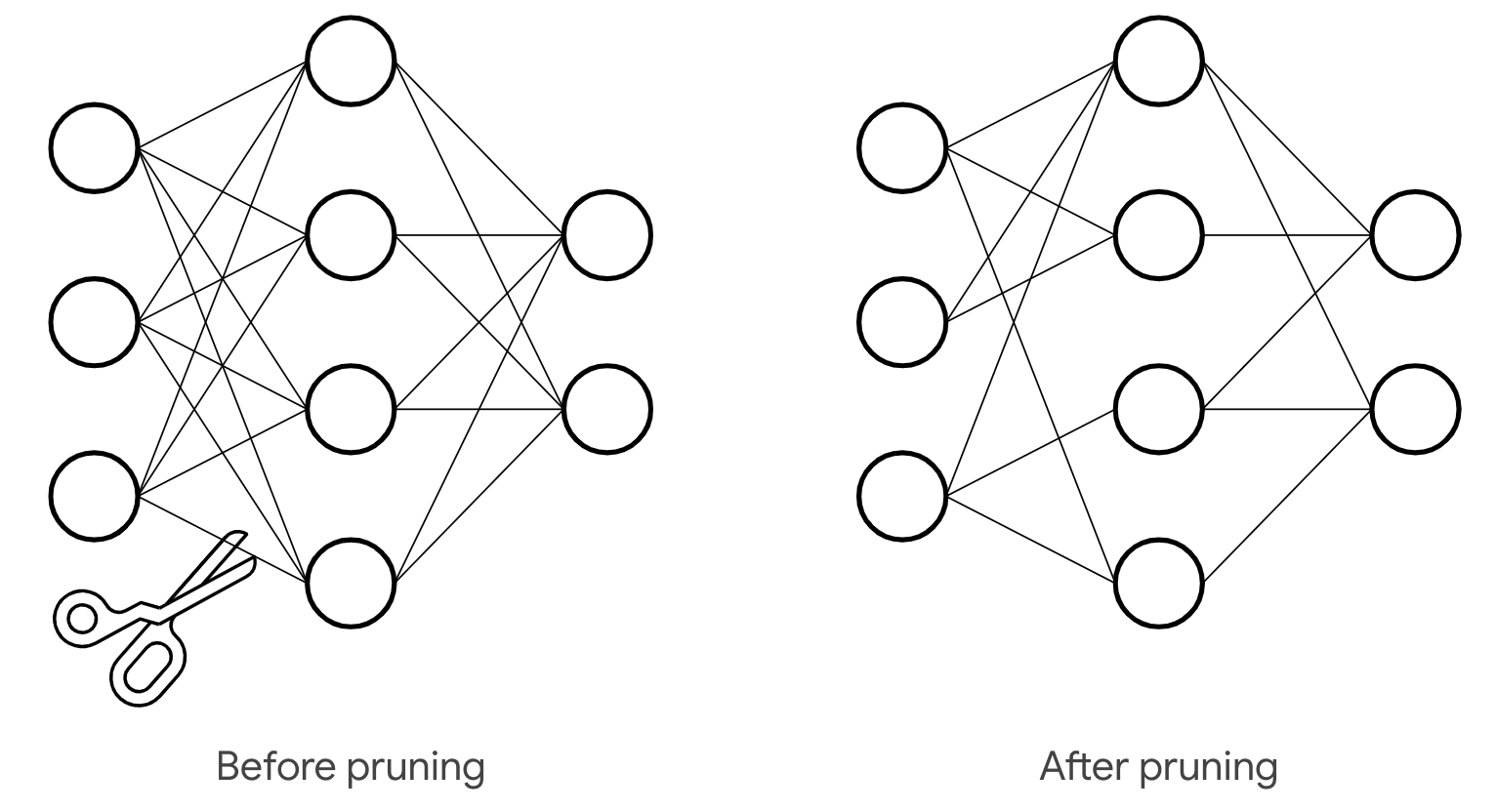

Pruning

Prune large networks to reduce the impact of over-parameterization

Context: Over-parameterization, the presence of redundant parameters that contribute little to the model's output, is a common problem in deep neural networks, especially in architectures such as CNNs. It is widely believed to be the reason a neural network can fit all of its training labels.

Problem: Over-parameterization increases the size of the network. More parameters also mean more computations and more memory accesses, and the added computational load can increase power consumption.

Solution: Network pruning removes non-critical or redundant neurons from a neural network to reduce its size with minimal effect on performance. Some existing work suggests that pruning deep learning models can be an effective way to improve energy efficiency. A pruned model requires fewer FLOPs (floating-point operations), which lowers bandwidth and power requirements during inference. Because the model is smaller, it also needs less memory.
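To make the idea concrete, here is a minimal sketch of neuron pruning on a single dense layer in plain Python. It is an illustration under stated assumptions, not a reference implementation: the scoring rule (L1 norm of each neuron's incoming weights) is one common heuristic chosen for the example, and the helper names `prune_neurons` and `dense_macs`, the `keep_ratio` parameter, and the toy weights are all hypothetical.

```python
def prune_neurons(weights, biases, keep_ratio):
    """Keep the output neurons (rows) with the largest L1 norm.

    weights: list of rows, one row of incoming weights per output neuron.
    biases: one bias per output neuron.
    keep_ratio: fraction of neurons to keep, in (0, 1].
    Returns (pruned_weights, pruned_biases, kept_indices).
    """
    if not 0 < keep_ratio <= 1:
        raise ValueError("keep_ratio must be in (0, 1]")
    n_keep = max(1, round(len(weights) * keep_ratio))
    # Assumption: a neuron's importance is the L1 norm of its incoming weights.
    scores = [sum(abs(w) for w in row) for row in weights]
    # Take the highest-scoring neurons, then restore their original order.
    ranked = sorted(range(len(weights)), key=lambda i: scores[i], reverse=True)
    kept = sorted(ranked[:n_keep])
    return [weights[i] for i in kept], [biases[i] for i in kept], kept


def dense_macs(weights):
    """Multiply-accumulate operations for one forward pass of a dense layer."""
    return sum(len(row) for row in weights)


# Toy layer: 4 output neurons with 3 inputs each; two rows are close to zero.
layer_w = [
    [0.90, -1.20, 0.75],
    [0.01, 0.00, -0.02],
    [-0.60, 0.80, 1.10],
    [0.00, 0.03, 0.01],
]
layer_b = [0.1, 0.0, -0.2, 0.0]

pruned_w, pruned_b, kept = prune_neurons(layer_w, layer_b, keep_ratio=0.5)
print(kept)                                        # [0, 2]
print(dense_macs(layer_w), dense_macs(pruned_w))   # 12 6
```

In a multi-layer network, removing a neuron also means removing the matching input column from the next layer, and a pruned model is usually fine-tuned afterwards to recover any lost accuracy. Neither step is shown in this sketch.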

Example: Consider an object detection system built on a highly accurate R-CNN model. Because the model is large and requires many computations, running inference on a single image takes a long time and consumes more energy. Pruning the model appropriately would cut the redundant computations, resulting in both faster inference and lower energy consumption. The example is based on this post.

- https://stackoverflow.com/questions/57395645/

- https://stackoverflow.com/questions/66231467/

- https://stackoverflow.com/questions/52064450/

- https://stackoverflow.com/questions/36645799/

- https://stackoverflow.com/questions/41701783/

- https://stackoverflow.com/questions/57197914/

- https://stackoverflow.com/questions/66090385/

- https://stackoverflow.com/questions/67009335/

- https://stackoverflow.com/questions/67243218/

- https://stackoverflow.com/questions/54781966/

- https://stackoverflow.com/questions/63687033/

- https://stackoverflow.com/questions/41958566/

- https://stackoverflow.com/questions/66590044/

- https://stackoverflow.com/questions/58711222/

- https://stackoverflow.com/questions/56156646/

- https://stackoverflow.com/questions/56224426/