Efficient read-write

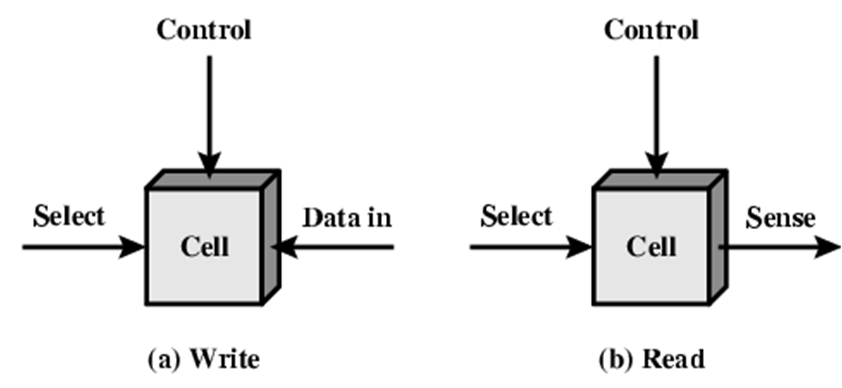

Minimize the memory footprint while performing read/write operations.

Context: Working with deep learning models requires reading and writing enormous amounts of data during the data cleaning, data preparation, and inference stages of the deep learning workflow.

Problem: Read and write operations contribute to a processor's energy consumption. Because deep learning involves large volumes of data, inefficient reads and writes can cost extra machine cycles, cause unnecessary data movement, and enlarge the memory footprint, all of which increase energy consumption.

Solution: When reading and writing data, minimize the number of operations by using efficient implementation methods, and avoid non-essential references to data so that the memory footprint stays small.
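As a framework-agnostic illustration of this solution (our own sketch, not taken from the source), the Python code below streams files instead of loading them whole: it iterates a text file line by line and hashes a binary file in fixed-size chunks, so only one line or chunk is referenced at a time. The function names `filter_lines` and `checksum` and the 1 MiB default chunk size are hypothetical choices.

```python
import hashlib

CHUNK_SIZE = 1 << 20  # hypothetical 1 MiB chunk; tune for the storage device


def filter_lines(src_path, dst_path, keep):
    """Copy only the lines for which keep(line) is True, streaming src to dst.

    Only the current line is referenced, so memory use does not grow with
    the file size (unlike calling f.read() or f.readlines() first).
    """
    kept = 0
    with open(src_path, "r", encoding="utf-8") as src, \
         open(dst_path, "w", encoding="utf-8") as dst:
        for line in src:          # file objects yield lines lazily
            if keep(line):
                dst.write(line)   # buffered writes group many small writes
                kept += 1
    return kept


def checksum(path, chunk_size=CHUNK_SIZE):
    """Hash a file in fixed-size chunks instead of reading it into memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # iter() with a sentinel stops when read() returns b"" at end of file
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

Peak memory here is bounded by one line or one chunk rather than by the file size; a larger chunk means fewer read calls at the cost of a slightly larger buffer.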

Example: Suppose a user wants to load a large number of images in batches to train a model in TensorFlow. Loading all of these images into RAM before training requires a lot of memory, which increases the memory footprint. Instead, the user can build a `tf.data.Dataset` that holds only the paths to the images and use its `map` function to load each batch of images only during the training step. Loading data this way avoids holding the entire dataset in RAM. The example is based on this Stack Overflow post.
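A minimal sketch of this approach, assuming TensorFlow 2.x, PNG image files, and integer labels. The helper names `load_image` and `make_dataset`, the 64-by-64 target size, and the batch size are hypothetical choices, not taken from the referenced post.

```python
import tensorflow as tf

IMAGE_SIZE = (64, 64)  # hypothetical target size; use whatever the model expects
BATCH_SIZE = 32        # hypothetical batch size


def load_image(path, label):
    """Read and decode one PNG file; runs inside the input pipeline, not up front."""
    raw = tf.io.read_file(path)
    image = tf.io.decode_png(raw, channels=3)    # uint8, shape (H, W, 3)
    image = tf.image.resize(image, IMAGE_SIZE)   # float32, shape (64, 64, 3)
    return image / 255.0, label


def make_dataset(paths, labels, batch_size=BATCH_SIZE):
    """Build a pipeline that keeps only paths and labels in memory up front."""
    ds = tf.data.Dataset.from_tensor_slices((paths, labels))
    ds = ds.shuffle(buffer_size=len(paths))  # shuffles path strings, which are cheap
    # map() defers decoding: images are loaded only when a batch is requested.
    ds = ds.map(load_image, num_parallel_calls=tf.data.AUTOTUNE)
    ds = ds.batch(batch_size)
    # prefetch() overlaps loading the next batch with the current training step.
    return ds.prefetch(tf.data.AUTOTUNE)
```

With hypothetical lists `train_paths` and `train_labels`, the dataset can be passed directly to training, for example `model.fit(make_dataset(train_paths, train_labels), epochs=5)`. Shuffling before `map` keeps the shuffle buffer filled with paths rather than decoded images.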

- https://stackoverflow.com/questions/37511148/

- https://stackoverflow.com/questions/52099863/

- https://stackoverflow.com/questions/35644264/

- https://stackoverflow.com/questions/55598516/

- https://stackoverflow.com/questions/40531543/

- https://stackoverflow.com/questions/54915054/

- https://stackoverflow.com/questions/51813951/

- https://stackoverflow.com/questions/48910590/

- https://stackoverflow.com/questions/48911249/

- https://stackoverflow.com/questions/40950590/

- https://stackoverflow.com/questions/45725053/

- https://stackoverflow.com/questions/52969867/

- https://stackoverflow.com/questions/61772307/

- https://stackoverflow.com/questions/61368378/

- https://stackoverflow.com/questions/54687497/

- https://stackoverflow.com/questions/47665314/

- https://stackoverflow.com/questions/48216772/

- https://stackoverflow.com/questions/64407272/

- https://stackoverflow.com/questions/51369763/

- https://stackoverflow.com/questions/66959820/